Who sends more emails in a month than Amazon?

Perhaps many brands do, but the company targets audiences both worldwide and locally. “At Amazon, we’re lucky to have a lot of subscribers to test our emails,” said Katie Seegers, email marketing manager for Amazon Local. Luckily, the company is willing to share the wealth when it comes to email best practices.

In her ClickZ Live SF presentation last week, Katie made it a point to distill what she’s learned from the brand’s large following into tips and tricks any marketer can use for better email engagement.

Here are her four rules for email strategies that drive more opens, clicks and long-term revenue.

1. Test everything, test frequently

Want to alter the call to action button in an email? Test two variations. Curious about the effect of a long versus short subject line? Test, test, test. All decisions should come from data, Katie shared. She also highlighted that email is a very dynamic medium — and subscribers can be fickle.

“Sometimes, it might come down to keeping it fresh for people. Don’t get complacent with results,” Katie said.

“In one case we tested two subject line variants,” she explained:

- Variant A: Extended with a lot of features. (Think: “New carwash deals in your town: 50% off.”)

- Variant B: Very short and direct. (Think: “Carwash deals.”)

- Results in month 1: The shorter subject line had the higher open rates, so it became the “winner” and approach for subsequent messages.

- Effects: Open rates began to level off to pre-experiment rates within one month.

- Retest with Variant A and Variant B.

- Result in month 2: This time, Amazon saw that longer subject lines had more impact.

Moreover, consider every change an opportunity to get data on what users want. She described a time when Amazon wanted to add a location tool to show how close or far a subscriber lived from a company putting out an offer. The change seemed like a no-brainer: simple and beneficial. But when they tested it, they found click-through rates went down. “People may have been less likely to click if they saw the deal was not as close as they would have hoped. It was a positive user learning experience.”

Ultimately, Katie’s team found the feature reduced clicks, but did not negatively impact revenue predictions. They opted to keep it in, even at the cost of clicks, to provide more value to the user upfront. But it was a key lesson in the value of proximity.

2. Plan experiments thoughtfully

Running an experiment is almost a science. Too many variables will make it hard to track what causes an uptick. “Only focus on the changes that are going to impact the result you want. Don’t clutter the email with unnecessary features,” Katie said.

Here are some of her tenets of email experimentation:

- A/B test with limited bias by segmenting according to gender, age, location and occupation.

- Determine what volume or percentage of subscribers should be included in a test based on confidence in the hypothesis.

Big change with unpredictable results? Keep it to about one-quarter of subscribers (or even a bit less).

Smaller change with a stronger bet? Go for up to 50 percent of subscribers.

- Limit to one (small) variable within a single test to track what causes the upticks.
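One way to carve out a fixed share of subscribers for a test is to hash each subscriber ID into a bucket, so the same person always lands in the same group. The sketch below is only an illustration under that assumption, not a description of Amazon's tooling; the function name `in_test_group`, the subscriber IDs and the experiment labels are all hypothetical.

```python
import hashlib

def in_test_group(subscriber_id: str, experiment: str, test_share: float) -> bool:
    """Return True if this subscriber falls inside the test share of the list.

    Hashing the experiment name together with the ID gives each experiment
    its own stable, roughly uniform split, independent of signup order.
    """
    digest = hashlib.sha256(f"{experiment}:{subscriber_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return bucket < test_share

# Hypothetical subscriber list and experiment names.
subscribers = [f"user-{i}" for i in range(10_000)]

# Big change with unpredictable results: about one-quarter of subscribers.
risky = [s for s in subscribers if in_test_group(s, "location-tool", 0.25)]

# Smaller change with a stronger bet: up to half of subscribers.
safer = [s for s in subscribers if in_test_group(s, "cta-button", 0.50)]

print(len(risky), len(safer))
```

Because the split depends only on the ID and the experiment name, rerunning the script does not reshuffle anyone between groups mid-test.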

3. Measure precisely

Don’t make major changes based on thin or lopsided data. Make sure you give experiments enough time to run, and only act on statistically significant results. Case in point: The difference between a 15.7% open rate and a 15.1% open rate – whether it’s a difference of four clicks or 400 clicks – isn’t necessarily statistically significant.
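A common way to check whether a gap like 15.7% versus 15.1% clears the significance bar is a two-proportion z-test. The sketch below uses that standard test with made-up numbers: the send and open counts are assumptions for illustration, not figures from the presentation, and the function name is hypothetical.

```python
import math

def two_proportion_z_test(opens_a, sends_a, opens_b, sends_b):
    """Return (z, two-sided p-value) for the difference between two open rates."""
    rate_a = opens_a / sends_a
    rate_b = opens_b / sends_b
    # Pooled open rate under the assumption that both variants perform the same.
    pooled = (opens_a + opens_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical test: 2,000 sends per variant, 15.7% vs. 15.1% open rate.
z, p = two_proportion_z_test(314, 2000, 302, 2000)
print(f"z = {z:.2f}, p = {p:.2f}")

# A p-value above the usual 0.05 threshold means the gap could easily be noise.
print("significant" if p < 0.05 else "not significant at the 5% level")
```

With these assumed counts the p-value lands well above 0.05, so the half-point gap would not justify a change on its own. The same function can be rerun with larger send counts to see how sample size affects the verdict.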

Don’t make marketing guesses; make decisions with data.

If you’re not getting the results you expected (or any results at all), instead of jumping to conclusions:

- Extend the length of the test

- Add more subscribers

- Tweak the variables

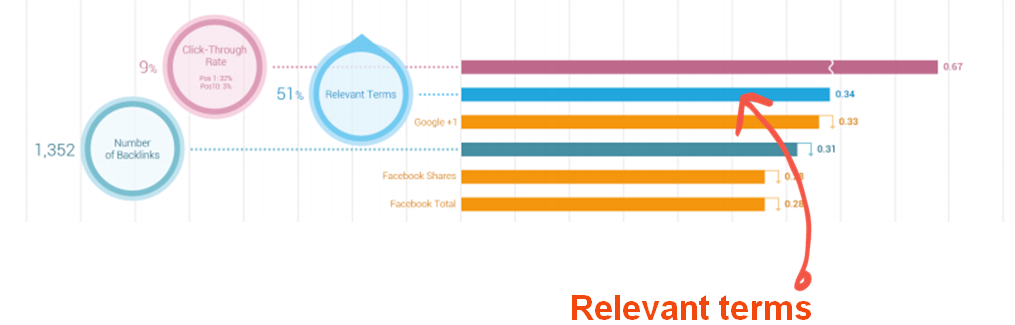

Which data point tends to map most closely to revenue success? Katie says it’s usually click-through rates.

4. Use data to personalize content

Once email test results are in, make sure to act on them. Copy length, offer types, educational materials and so on can all be matched to the segments of subscribers for which the data shows they work. Useful signals include clicks, opens and email-based purchases.

But Katie suggests taking it one step further:

“Plan sends not only according to email-identifiable metrics, like open rates, clicks and other engagement factors, but also according to user demographics and shopping propensity models,” Katie said.

On that note, she also advised marketers to pull the plug (temporarily) on people who aren’t engaging.

“Reducing emails can feel risky, but cutting back based on DISinterest signals improved deliverability and revenue,” she said. Instead, only send to those contacts when you have something dramatically new to offer that maps to their propensity models.

What’s next for email marketing?

Katie said her team is focusing on mobile, and she recommends that marketers prepare for optimal mobile email experiences as well. “Amazon doesn’t have a magic answer for the best way to create mobile layouts… we know matching the experience of the email with the content behind the click is essential [for readers on any device]. And we know mobile is a vital focus area.”

Check out more Brafton coverage of ClickZ Live!