Marketers unanimously shudder at the idea of publishing duplicate content. It’s well-known throughout the SEO world that publishing scraped or copied web content is a punishable practice that can cost brands’ entire sites visibility in search results. However, there have been numerous updates that indicate Google is softening its stance on duplicate content penalties. This doesn’t give brands the green light to replicate materials as a way to build bigger sites, but it suggests Google has nailed down a process for spotting and eradicating blatant black-hat practices.

Newsflash: Not all duplicate content is spam

“Duplicate content does happen,” Cutts said in the latest Webmaster Help Channel video, adding that around one-third to one-quarter of the web is made up of duplicate content.

The duplicate content rule can’t be a black-and-white matter because it would make it impossible for writers to quote one another or pull from existing research to curate fresh pieces.

“It’s not the case that every single time there’s duplicate content, it’s spam. And if we made that assumption, the changes that happened as a result would probably hurt our search quality rather than help our search quality,” Cutts explained.

… But some is

That being said, Cutts pointed out that, “If you do nothing but duplicate content and you’re doing it in an abusive, deceptive, malicious or deceptive way, we do reserve the right to treat action on spam.”

“We do reserve the right to treat action on spam.” – Matt Cutts

Like much of Google’s guidance, this update seems to suggest marketers can’t trust hard-and-fast SEO rules to win visibility online. By blurring the lines, the search engine is making it harder for companies to optimize on a basic level. Marketers must use their best judgement and consider what value their blog articles, social posts and pictures offer site visitors. From this baseline, they can figure out where to improve.

Duplicate content not always penalized, but less visible

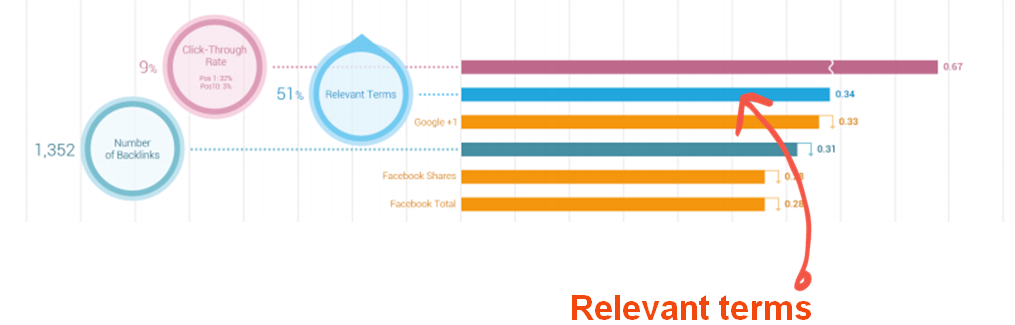

Although duplicate web content may not be punishable in the way that marketers thought, it won’t give them prominence in SERPs. Cutts reported that Google doesn’t automatically penalize sites publishing replicated information, but they probably won’t show up at the top of results pages for those key terms.

Around one-third to one-quarter of the web is made up of duplicate content.

When search crawlers see that two pages contain the same information, they choose one to surface at the top of results and push the other to the bottom. It’s safe to assume the page that gets the most exposure will be the one considered to be more relevant and valuable for users.