Since the demise of keyword-dependent SEO strategies, traffic has been one of the primary goals of content marketers. There’s no denying that businesses reach more potential customers when they get more eyes on blogs and news content, but overall clicks don’t tell the whole story. As Brafton reported, companies can post big traffic numbers without seeing a corresponding rise in conversions. That’s why it may be a good idea to take a subjective look at websites. In fact, even Google does it.

Who watches the spam-watchers?

In Matt Cutts’ latest Webmasters video, he answered a question about algorithmic decisions. Specifically, he was asked how the search engine decides between two different iterations of the same algorithm, and his answer sheds further light on how Google qualitatively and quantitatively judges the value of websites and web content.

He revealed that Google judges the strengths of different algorithms using both subjective ratings from human analysts and numerical metrics based on factors such as clicks. The human raters assess search engine results pages (SERPs) for content relevance, overall page quality and the reputation of each source. Total click counts help paint a picture, but Cutts cautioned that quantitative measurements sometimes miss part of the story, because many users don’t recognize spam until after they’ve already clicked on it.

“For example, in webspam, people love to click on spam, and so sometimes, our metrics look a little bit worse, because people click on the spam,” he said. “Therefore, it looks like people don’t like the new algorithm as much, so you have to take all those ratings with a little bit of a grain of salt.”

When empirical judgements fail

Given what Google has revealed about its own anti-spam efforts, how can marketers tell whether their websites are too spammy? High traffic paired with a low conversion rate often indicates that something is amiss in the sales pipeline. Spam, by its very nature, is designed to get users to click, but it offers them no real value. Content needs editorial strength, and even sites that manage to slip past Google’s Webspam team won’t do much for a company’s ledger.

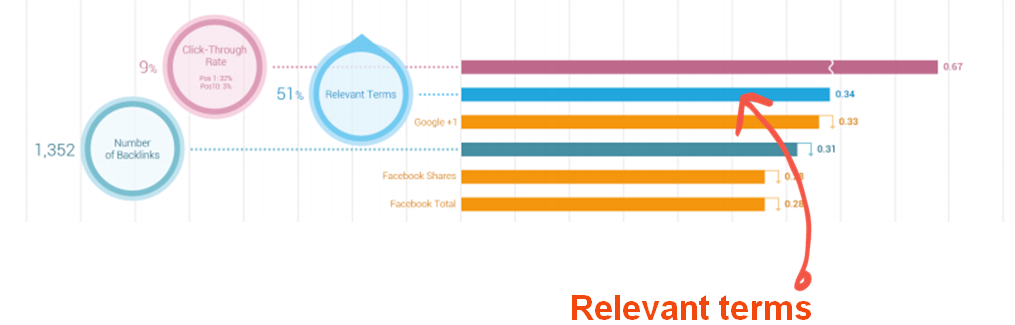

As Brafton reported, stronger content can earn “editorial votes” from other websites in the form of links, but those links only come when a page offers high-quality media and resources. At the end of the day, even Google has to rely on actual humans. So far, no one has built a program that can perfectly judge the quality of written content. Even when algorithms tell engineers that a website deserves to rank highly, periodic qualitative audits are still needed to shape how search engines function.