Google has rolled out a new search feature that tells users when a site has blocked Googlebot from crawling a page or page elements, leaving no snippet information to display on search engine results pages (SERPs). Previously, Google would generate a description from whatever limited data it had about the page, and the result often read awkwardly or misrepresented the page’s content. For marketers, the change may be an added incentive not to block Googlebot from pages that could otherwise attract organic search clicks.

On SERPs, users will now see the message “A description for this result is not available because of this site’s robots.txt – learn more.” The “learn more” link leads to a page that explains why some sites choose to block Googlebot and describes, for marketers and webmasters, how to block or unblock the search crawler.

The message could also alert webmasters to pages on their sites that they may have blocked unintentionally.
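For webmasters who want to check for accidental blocks before Google flags them, a quick audit can be run against a site’s robots.txt rules. The sketch below is only an illustration: the robots.txt contents and page paths are hypothetical, and it relies on Python’s standard-library `urllib.robotparser`, whose matching rules are simpler than Google’s own (for example, it does not handle `*` or `$` wildcards in paths), so treat its results as a rough first pass.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Allow: /
"""


def blocked_paths(robots_txt: str, paths: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the subset of paths that robots_txt disallows for the given user agent."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines; no network request is made.
    parser.parse(robots_txt.splitlines())
    # Keep only the paths the named crawler is not allowed to fetch.
    return [p for p in paths if not parser.can_fetch(agent, p)]


if __name__ == "__main__":
    # Hypothetical page paths to audit.
    pages = ["/", "/products/widget", "/private/report"]
    print(blocked_paths(ROBOTS_TXT, pages))
```

Any path the function returns is one that Googlebot is told not to crawl, and so is a candidate for the “description not available” message in search results.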

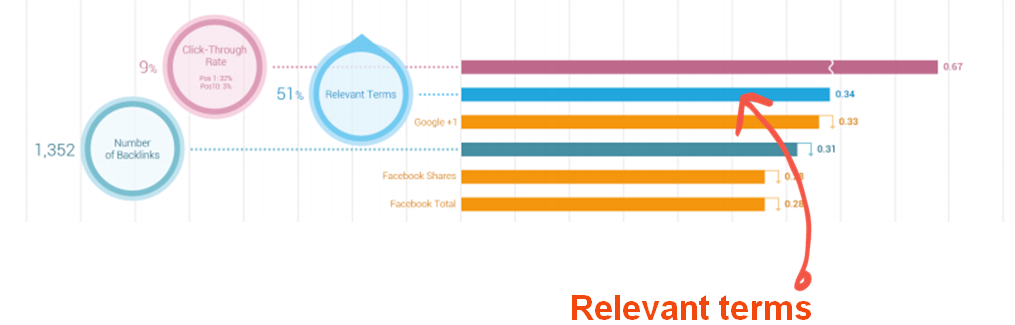

As SEO evolves and Google urges companies to make their sites as user-friendly as possible, the move keeps inaccurate descriptions from misleading users. Improving the overall accuracy of search results is a long-standing goal for Google. On its Inside Search blog, the company reported that it has been making more active use of keyword synonyms as ranking signals. In June, Google rolled out five changes to its algorithms and ranking signals aimed at synonyms.