It was predicted that the latest version of the Penguin algorithm would be the most-talked-about Google search ranking update of the year, according to Brafton’s infographic. Because it affects 2.3 percent of all English queries, it makes sense that the spam-fighting technology would ruffle a few feathers. And it did. Numerous webmasters are still reeling from the late-May release of Penguin 2.0, and some are still looking for guidance about where they went wrong and how they can bring their search engine marketing practices back to full force.

Google’s Webspam team has provided professionals with some guidance about next steps, but the search engine seems to stand by the old adage that it’s better to teach people how to do something for themselves than to hand them the end result once. In the most recent Webmaster Help Channel video, Search Engineer Matt Cutts says publishers will need to do some research on their own to find examples of bad links on their domains.

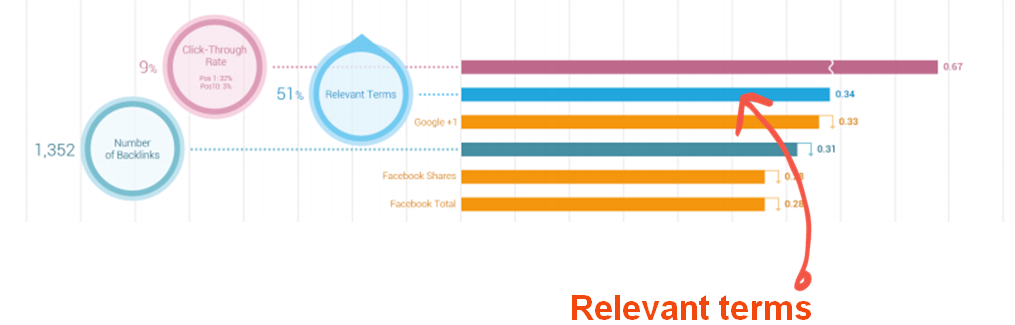

“We’re working on becoming more transparent and giving more examples with messages as we can,” Cutts states. Until then, webmasters will need to practice due diligence by reviewing their backlink profiles, contacting webmasters and consulting content marketing specialists to get to the root of their SEO problems.

“I wouldn’t try to say ‘Hey, give me more examples’ in a reconsideration request,” Cutts adds. For one, the number of responses Parsers can offer is limited; for another, a reconsideration request isn’t necessarily the appropriate forum for researching links.

Instead, Cutts advises confused SEOs to stop by the Webmaster Forum and see if there are any Googlers around who can provide additional context about the errors from particular spam incidents.